In 2020, Start Network undertook a number of consultations with communities that were supported by its funding. These included a large household survey in Senegal evaluating the quality and impact of assistance provided in anticipation of drought through ARC Replica, as well as consultations gathering the perceptions of people assisted in Pakistan, India and DRC as part of our due diligence pilot. Central to the planning of these evaluations was an intention to be as inclusive as possible by amplifying marginalised voices, and to ensure that the people interviewed were able to express themselves in their local language by hiring local consultants to conduct interviews.

Start Network's Evidence and Learning team has since been reflecting on how we can better undertake our evaluations of humanitarian responses. In particular, we have been thinking about how we can better gain consent to gather and share information, what criteria and perspectives we use to evaluate the quality of the response, and how this information is then shared with all relevant stakeholders. As we plan for additional consultations with communities this year, we would like to make three commitments to the people who help us evaluate the funding that is released through Start Network: (1) Clarity around purpose, (2) Sharing our findings, and (3) Measuring success differently.

Clarity around purpose

We would like to commit to carving out more time in the consultation process to explain the purpose of the interview and how the information will be used. Consultation time is always a challenge, especially when interviews need to be held remotely. However, we believe that ensuring the contributor fully understands why we are doing the interview and how the information will be used better validates their consent and will also likely lead to more relevant information, especially in response to open-ended questions.

Sharing our findings

We would like to commit to exploring ways that we can share the findings of our evaluation and research with the people who shared their experiences with us. Working with our local consultants and the agencies implementing responses, we will test information-sharing approaches that agencies already use for behavioural messaging, such as text messages, flyers or posters.

Measuring success differently

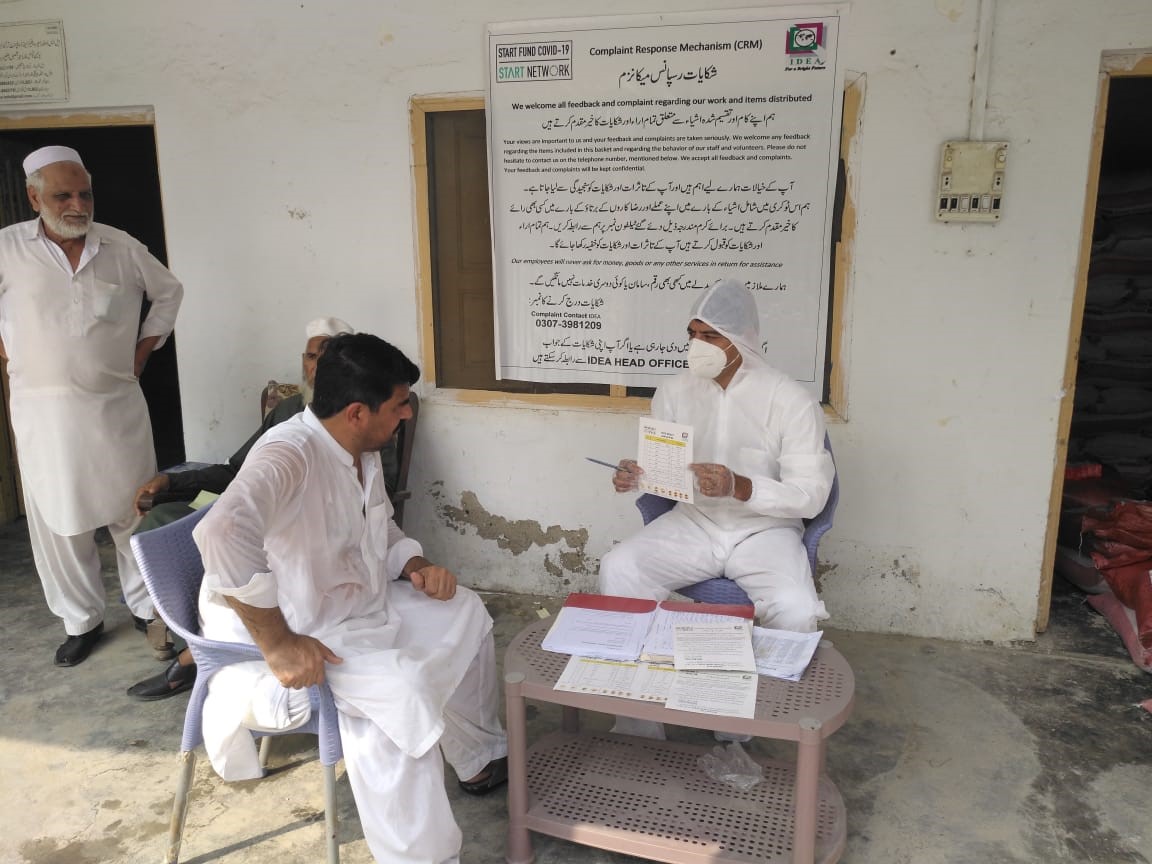

Traditional approaches to evaluating the success of humanitarian programmes are driven by Western ideas around accountability and views on what makes a good success indicator. We tend to focus on indicators such as whether items arrived at the right time and were of good quality, whether those assisted were well-informed about the complaint procedure, and whether anyone felt mistreated. However, some of our recent evaluations have suggested that although people’s knowledge of formal complaints mechanisms can be low, this is not seen as a major failure; it is either not seen as a priority or people have other ways to voice concerns. Therefore, we would like to explore local perceptions of programme success and ways to measure these, so that our accountability really lies with the people who have been affected by crises.

Of course, all of these commitments require us to invest more time and resources in our evaluations and community consultations, but we believe that this will ultimately lead to better quality and more relevant information on which to build our evidence and learning. We will also be intentionally thinking about how we express ourselves in these consultations. This includes avoiding jargon and acronyms, and testing how well expressions in English, our native language, are translated into the many diverse languages of those we will be listening to.